By: Arif Khan.

As we aware that in present programming world applications became more complex, application logs can be very useful, not only while debugging but to get insight of applications issues and performance. Python provide a bundle of free to use libraries logging is one of them, that provide most of the basic logging features, a log message can bring a lot of useful information about running process or thread. This post is not meant to be a final wordict on the Python logging but rather a getting started document that introduces some logging concepts in Python.

Logging Levels in Python

By default there are six logging levels in Python; each level is associated with the log severity number.

NOTSET=0

DEBUG=10

INFO=20

WARN=30

ERROR=40

CRITICAL=50

All the levels are straightforward (DEBUG < INFO < WARN ) except NOTSET

Python logging formatting

Log formatter enriches a log information message by adding context info to it. It can be useful to know when the log is sent, where (Python file, line number, method, etc.), and additional context such as the thread or process (it can be very useful while debugging a multithreaded application).

When a log message “MyLog” is sent via log formatter:

"%(asctime)s — %(name)s — %(levelname)s — %(funcName)s:%(lineno)d — %(message)s"It will turned into following.

2021-23-0712:44:41,285 - abc - WARNING - <module>:1– MyLog

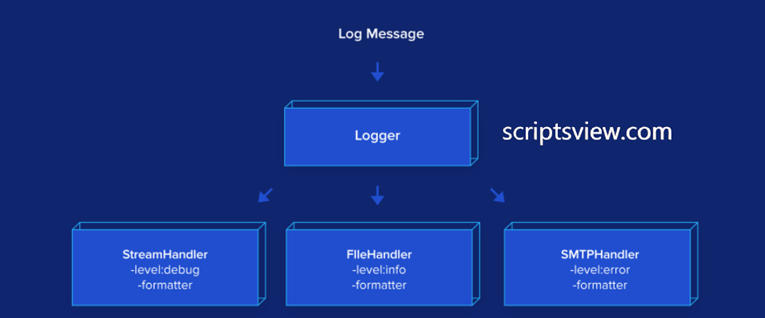

Python logging handler

The log handler effectively writes/displays a log: it displays in the console via StreamHandler, and in a file via FileHandler, or even by sending an email via SMTPHandler.

There are two important fields in each log handler:

- Formater it adds context information to a log.

- Log Level it filters out logs whose levels are inferior. So a log handler with the INFO level will not handle DEBUG logs.

The standard library provides a handful information about handlers that should be enough for common use cases.

The most common ones are

StreamHandler and FileHandler:

console_handler = logging.StreamHandler()

file_handler = logging.FileHandler("filename")

Python Logger

Python Logger most often used directly in the code which also most complicated. New logger can be obtained by

newlogger = logging.getLogger("newlogger")Three main logger fields

- Propagate: it decides that a log should be propagated to the logger’s parent. Default value is True.

- Level: Just like the log handler level, the logger level is used to filter out less important logs. Except, unlike the log handler, the level is only checked at the child logger, once the log is propagated to its parents, the level will not be checked. This is an un-intuitive behavior.

- Handlers: The list of handlers that a log will be sent to when it arrives to a logger. This allows a flexible log handling for example, you can have a file log handler that logs all DEBUG logs and an email log handler that will only be used for CRITICAL logs. In this regard, the logger-handler relationship is similar to a publisher-consumer one: A log will be broadcast to all handlers once it passes the logger level check.

A logger is unique by name, if a logger with the name “newlogger” has been created, the consequent calls of logging.getLogger("newlogger ") will return the same object:

assert id(logging.getLogger("newlogger ")) == id(logging.getLogger("newlogger "))As loggers have a hierarchy. Root logger is on the top of the hierarchy, it can be accessed by logging.root. This logger is called when methods like logging.debug() is used. WARN is the default root log level, so every log with lower level will be ignored. Default handler will be created the first time a log with a level greater than WARN is logged. Using the root logger directly or indirectly via methods like logging.debug() is generally not recommended.

When a new logger is created, by default its parent will be set to the root logger

lab = logging.getLogger("x.y")

assert lab.parent == logging.root

However, the logger uses the dot notation, meaning that a logger with the name “x.y” will be the child of the logger “x.” However, this is only true if the logger “x” has been created, otherwise “x.y” parent is still the root.

la = logging.getLogger("x")

assert lab.parent == la

If the log level is lower than logger level, the log will be ignored, it uses its effective level instead of the actual level. The effective level is the same as logger level if the level is not NOTSET, i.e., all the values from DEBUG up to CRITICAL; however, if the logger level is NOTSET, then the effective level will be the first ancestor level that has a non-NOTSET level.

By default, a new logger has the NOTSET level, and as the root logger has a WARN level, the logger’s effective level will be WARN. So even if a new logger has some handlers attached, these handlers will not be called unless the log level exceeds WARN:

newlogger = logging.getLogger("newlog ")

assert newlogger.level == logging.NOTSET # new logger has NOTSET level

assert newlogger.getEffectiveLevel() == logging.WARN # and its effective level is the root logger level, i.e. WARN

# attach a console handler to newlogger

console_handler = logging.StreamHandler()

newlogger.addHandler(console_handler)

newlogger.debug("debug") # nothing is displayed as the log level DEBUG is smaller than newlog effective level

newlogger.setLevel(logging.DEBUG)

newlogger.debug("debug message") # now you should see "debug message" on screen

By default, the logger level will be used to decide of the log passes: If the log level is lower than logger level, the log will be ignored.

Python Logging Best Practices

The logging module is very handy, but it contains some quirks that can cause long hours of headache for even the best developers. In my opinion below are the best practice for using this module:

- Just Configure the root logger but don’t use it in your code—e.g., don’t call a function like

logging.info(), which actually calls the root logger behind the scene. If you want to catch error messages from libraries you use, make sure to configure the root logger to write to file, for example, to make the debugging simple. By default, the root logger only outputs tostderr, so the log can get lost easily. - To use the logging, make sure to create a new logger by using

logging.getLogger(logger name).

import logging

import sys

from logging.handlers import TimedRotatingFileHandler

FORMATTER = logging.Formatter("%(asctime)s — %(name)s — %(levelname)s — %(message)s")

LOG_FILE = "appname.log"

def get_console_handler():

console_handler = logging.StreamHandler(sys.stdout)

console_handler.setFormatter(FORMATTER)

return console_handler

def get_file_handler():

file_handler = TimedRotatingFileHandler(LOG_FILE, when='midnight')

file_handler.setFormatter(FORMATTER)

return file_handler

def get_logger(logger_name):

logger = logging.getLogger(logger_name)

logger.setLevel(logging.DEBUG) # better to have too much log than not enough

logger.addHandler(get_console_handler())

logger.addHandler(get_file_handler())

# with this pattern, it's rarely necessary to propagate the error up to parent

logger.propagate = False

return logger

After you can create a new logger and use it:

mylogger = get_logger("modulename")

mylogger.debug("debug message")

- Use RotatingFileHandler classes, such as the TimedRotatingFileHandler used in the example instead of FileHandler, as it will rotate the file for you automatically when the file reaches a size limit or do it every day.

- Catch error logs automatically use tools like Sentry, Airbrake, Raygun, etc. This is useful in the context of a web application, where the log can be very verbose and error logs can get lost easily. Another advantage of using these tools is that you can get details about variable values in the error so you can know what URL triggers the error, which user is concerned, etc.